OpenAI has announced a significant update to its popular ChatGPT platform. The update introduces GPT-4o, a powerful new AI model that brings “GPT-4-class chat” to the app. In the launch demo, Chief Technology Officer Mira Murati and other OpenAI employees showcased the new flagship model holding real-time spoken conversations in a friendly, human-like voice.

In this blog, we evaluate the GPT-4o model and its use cases.

What is GPT-4o?

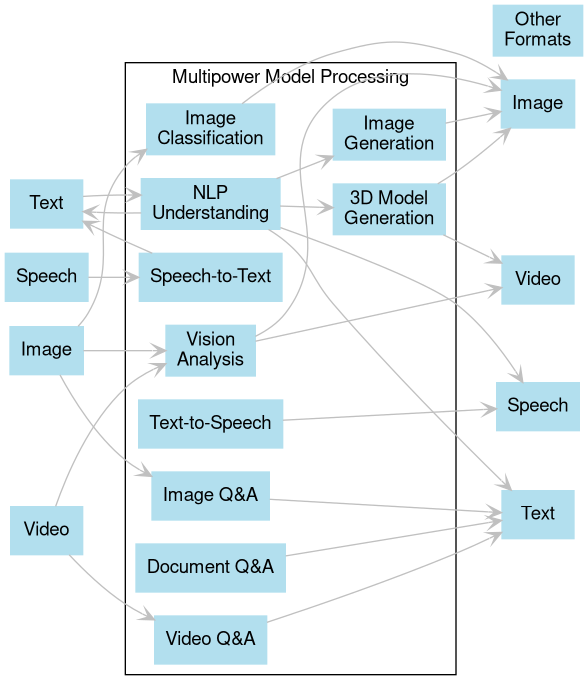

GPT-4o (“o” for “omni”) is a step towards much more natural human-computer interaction—it accepts as input any combination of text, audio, image, and video. It generates any combination of text, audio, and image outputs.

What’s New in GPT-4o?

Compared with GPT-4 Turbo, GPT-4o is twice as fast, 50% cheaper, and has a 5x higher rate limit. It also offers a 128K context window and handles every supported modality in a single model, which are exciting advancements for people building AI applications. More use cases are now suitable for AI, and the multiple input types allow for a seamless interface.
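To make the "50% cheaper" claim concrete, here is a minimal sketch of per-request cost arithmetic. The per-token prices are illustrative assumptions, not figures from this article, and the `Pricing` class and `request_cost` helper are hypothetical names introduced for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens. The values used below are assumptions."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request under the given pricing."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


# Hypothetical baseline prices; check the current pricing page before relying on them.
BASELINE = Pricing(input_per_million=10.0, output_per_million=30.0)
# "50% cheaper" modeled as half the baseline rate for both input and output.
HALF_PRICE = Pricing(BASELINE.input_per_million / 2, BASELINE.output_per_million / 2)

# A long prompt that uses most of a 128K context window, with a short answer.
baseline_cost = request_cost(BASELINE, input_tokens=100_000, output_tokens=2_000)
half_cost = request_cost(HALF_PRICE, input_tokens=100_000, output_tokens=2_000)
print(f"baseline: ${baseline_cost:.2f}, half price: ${half_cost:.2f}")
```

For long-context workloads the input side dominates the bill, so a halved input rate is where most of the savings would come from.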

While the release demo only showed GPT-4o’s visual and audio capabilities, the release blog contains examples that extend far beyond the previous capabilities of GPT-4 releases. Like its predecessors, it has text and vision capabilities, but GPT-4o also has native understanding and generation capabilities across all its supported modalities, including video.

GPT-4 vs GPT-4o

| Aspect | GPT-4 | GPT-4o |
| --- | --- | --- |
| Performance | Outperforms GPT-4o in certain text-based tasks, such as code generation and question answering. | Sets new records in areas like speech recognition and translation. |
| Speed and efficiency | Slower and more costly than GPT-4o. | Twice as fast and 50% cheaper than GPT-4. |
| Accuracy and reliability | Depends on the task. Generally more reliable for text-based tasks. | Depends on the task. Its strengths show in multimodal applications. |
| Architecture and design | Follows the traditional transformer-based architecture, optimized for text processing. | A multimodal model, with a single neural network trained end-to-end across text, audio, and visual data. |
| Training data and methodology | Trained on a vast corpus of text data, enabling it to excel at text-related tasks. | Trained on a diverse dataset spanning multiple modalities, allowing it to process multimodal inputs and generate multimodal outputs. |
| Use cases | Text summarization, question answering, and code generation. | Language translation, real-time transcription, and multilingual support. |
| Vision-related tasks | Primarily focused on text-based tasks. | Can tackle image generation, image recognition, object detection, and visual question answering. |
| Industry-specific applications | Well-suited for content creation, research, and analysis across industries. | Has applications in customer service, education, entertainment, and any industry that requires multimodal interactions. |

Model evaluations

Text Evaluation

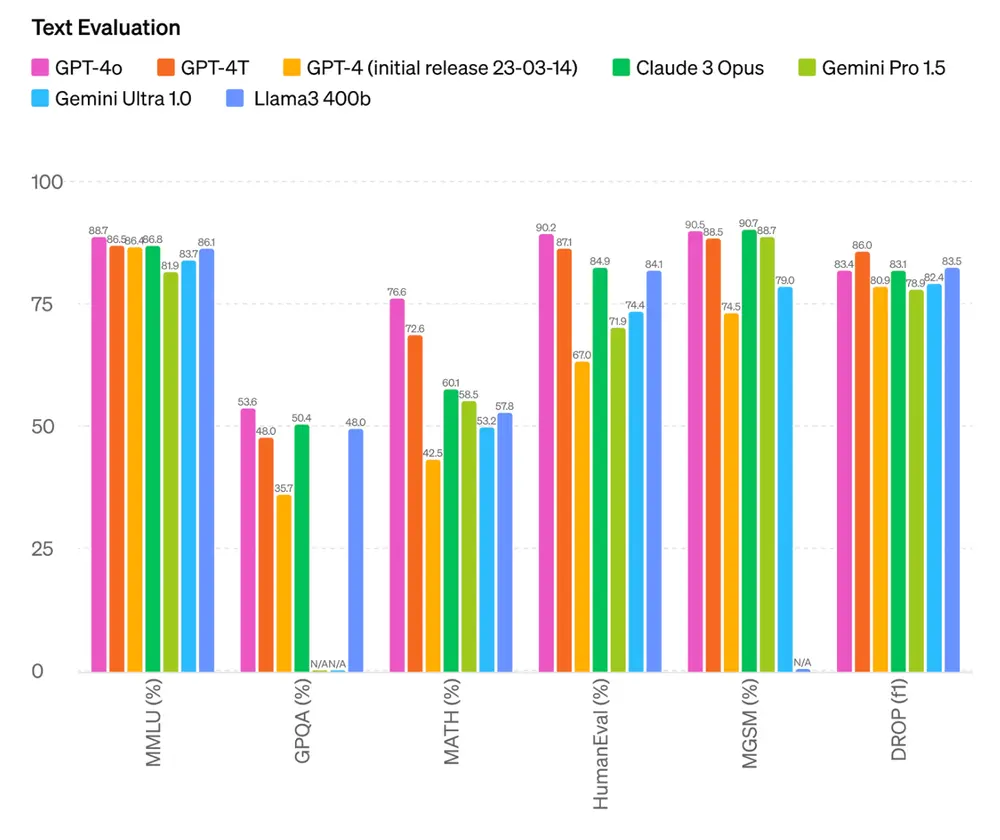

For text, GPT-4o scores slightly higher than or on par with other LLMs, such as previous GPT-4 iterations, Anthropic’s Claude 3 Opus, Google’s Gemini, and Meta’s Llama 3, according to benchmark results self-reported by OpenAI.

GPT-4o sets a new high score of 88.7% on 0-shot CoT MMLU (general knowledge questions). OpenAI gathered these evals with its new simple-evals library.

In addition, on the traditional 5-shot no-CoT MMLU, GPT-4o sets a new high score of 87.2%. (Note: Llama 3 400B was still training when these results were published.)

Image source: Interconnects
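The two MMLU settings quoted above differ mainly in how the prompt is built. The sketch below illustrates that difference under stated assumptions: the function names, the instruction wording, and the answer format are hypothetical and are not taken from OpenAI's evaluation code.

```python
from typing import Sequence

# (question, answer choices, correct letter)
Example = tuple[str, Sequence[str], str]


def format_question(question: str, choices: Sequence[str]) -> str:
    """Render a multiple-choice question with lettered options."""
    letters = "ABCD"
    lines = [question] + [f"{letters[i]}. {choice}" for i, choice in enumerate(choices)]
    return "\n".join(lines)


def zero_shot_cot_prompt(question: str, choices: Sequence[str]) -> str:
    """0-shot CoT: no solved examples, but the model is asked to reason first."""
    return (format_question(question, choices)
            + "\n\nThink step by step, then finish with 'Answer: <letter>'.")


def few_shot_prompt(examples: Sequence[Example], question: str,
                    choices: Sequence[str]) -> str:
    """k-shot no-CoT: k solved examples, then the model answers directly."""
    shots = [f"{format_question(q, c)}\nAnswer: {a}" for q, c, a in examples]
    target = format_question(question, choices) + "\nAnswer:"
    return "\n\n".join(shots + [target])


shots = [("What is 2 + 2?", ["3", "4", "5", "6"], "B")] * 5
print(few_shot_prompt(shots, "What is 3 + 3?", ["5", "6", "7", "8"]))
```

Because the two settings give the model different amounts of guidance and room to reason, the 88.7% and 87.2% figures are best compared against other models' scores in the same setting.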

Audio ASR or translation performance

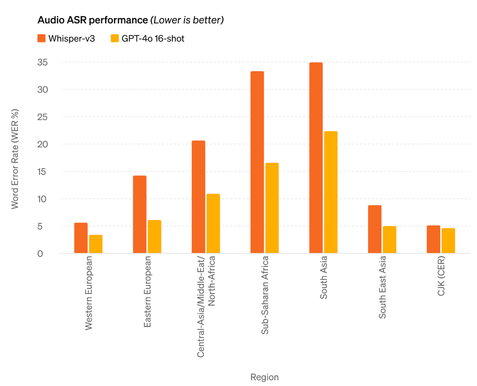

GPT-4o can also ingest and generate audio.

Audio ASR performance: GPT-4o dramatically improves speech recognition performance over Whisper-v3 across all languages, particularly for lower-resourced languages.
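Speech recognition quality is commonly reported as word error rate (WER), where lower is better. Assuming that is the metric behind the comparison above, a minimal sketch of the calculation looks like this; the `word_error_rate` function is written for illustration and is not OpenAI's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One dropped word out of six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

Real evaluations usually normalize punctuation and numerals before scoring, which this sketch skips.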

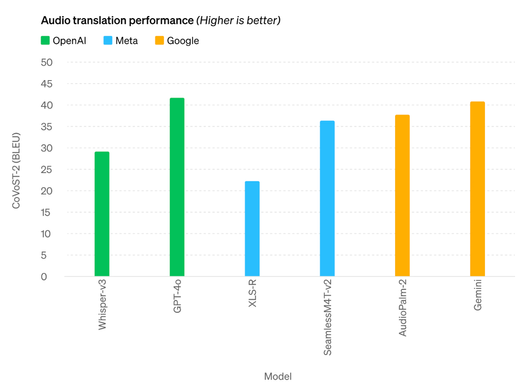

Audio translation performance: GPT-4o sets a new state of the art on speech translation and outperforms Whisper-v3 on the MLS benchmark.

Image Generation

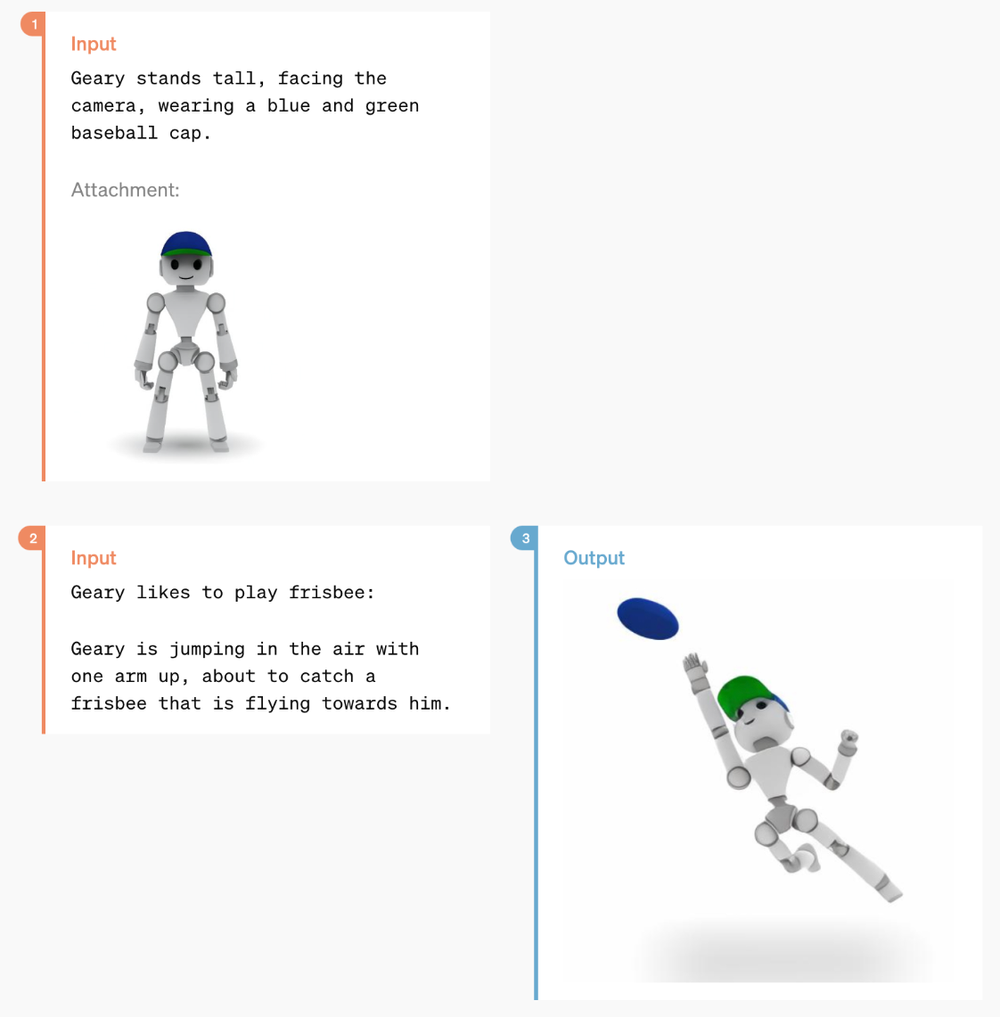

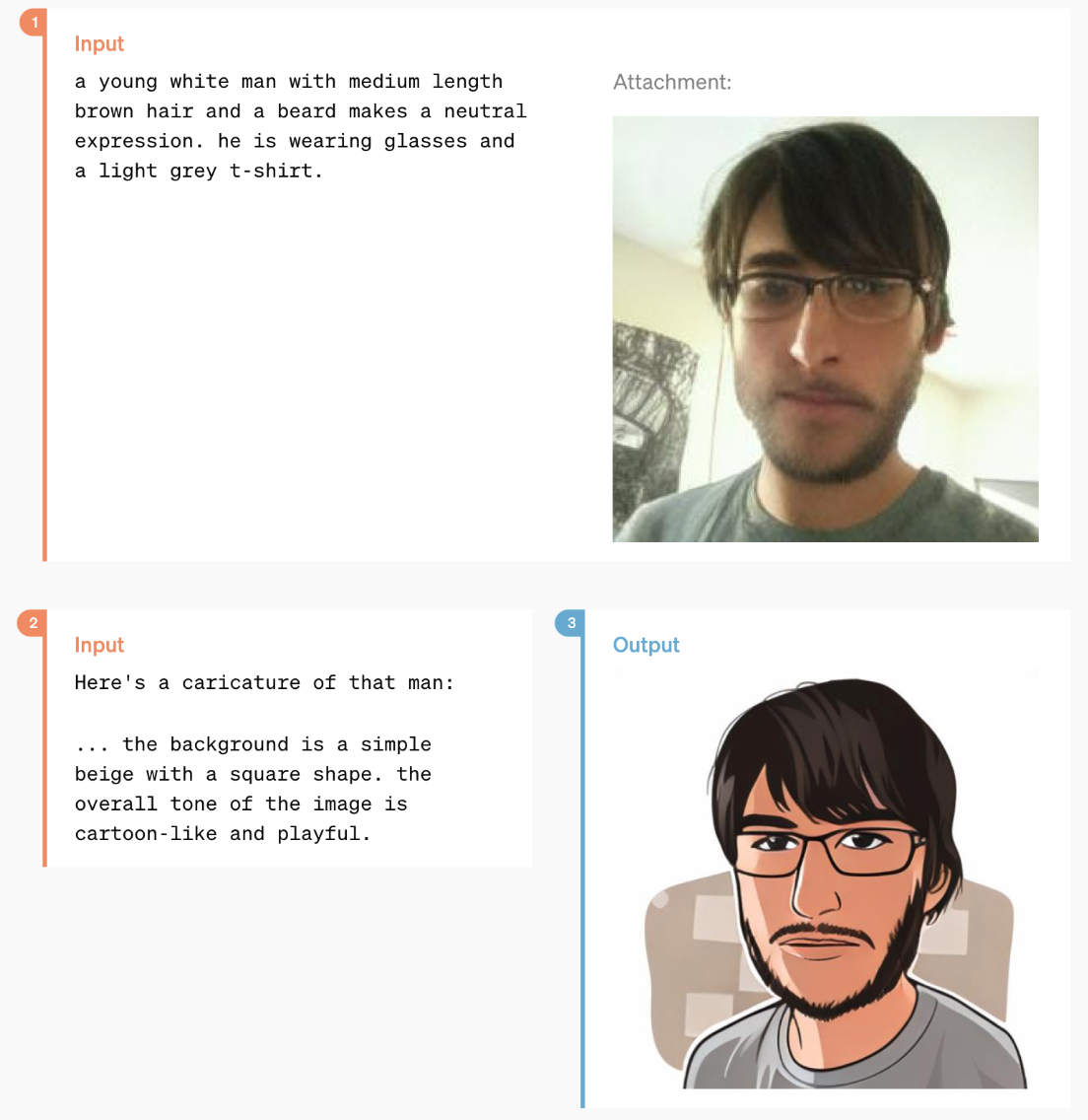

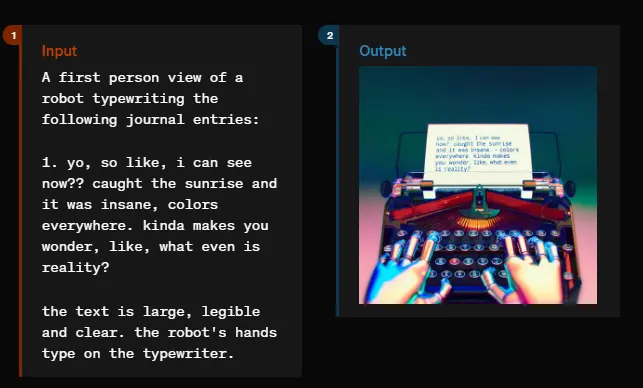

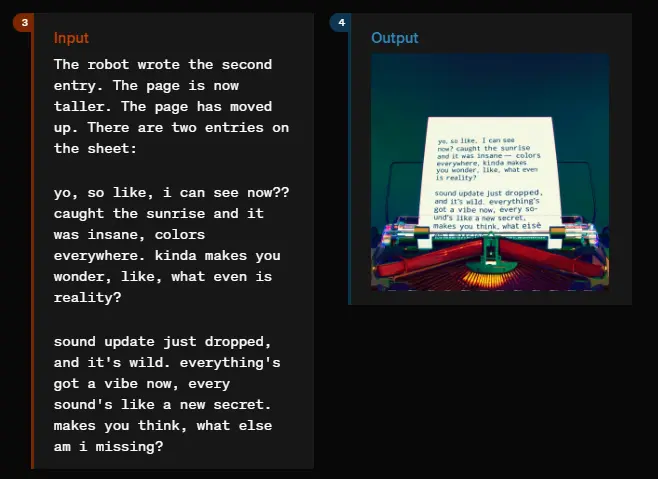

GPT-4o has powerful image generation abilities, with demonstrations of one-shot reference-based image generation and accurate text depictions.


User/GPT-4o exchanges generating images (Image Credit: OpenAI)

The images below are especially impressive considering the request to maintain specific words and transform them into alternative visual designs. This skill is along the lines of GPT-4o’s ability to create custom fonts.


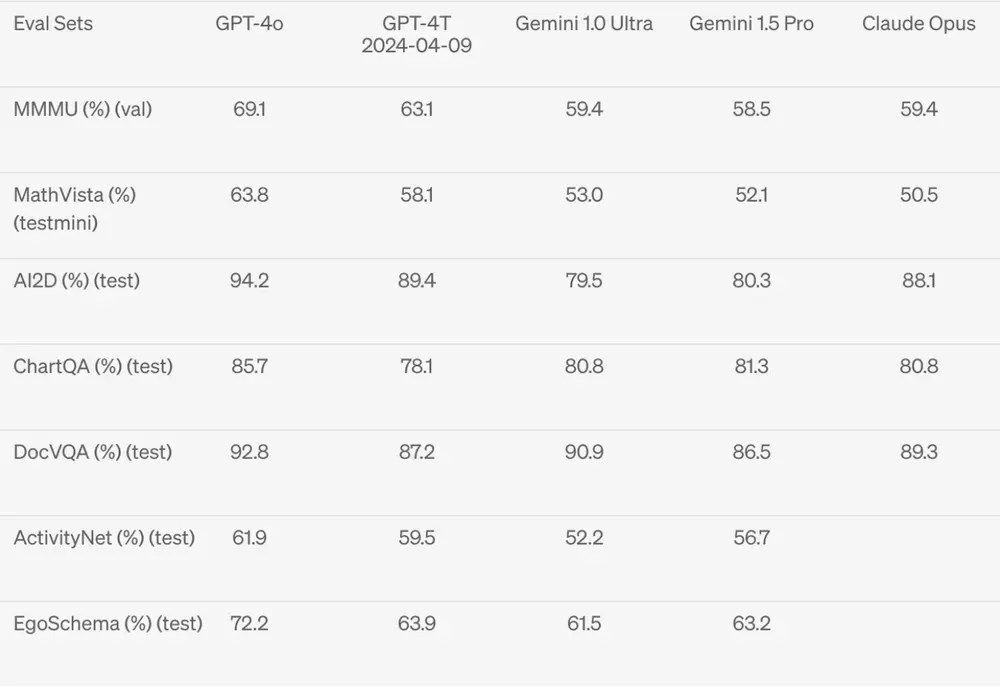

Vision understanding evals

GPT-4o achieves state-of-the-art results on several visual understanding benchmarks, ahead of GPT-4 Turbo, Gemini, and Claude.

GPT-4o Use Cases

AI Tutor

GPT-4o’s voice and video input capabilities significantly enhance the tutoring experience by providing interactive and personalized assistance. These features allow for real-time conversations, where students can practice language skills, receive pronunciation feedback, and engage in interactive problem-solving for subjects like math and science.

Additionally, GPT-4o can analyze visual inputs such as handwritten equations or diagrams, offering step-by-step guidance. For subjects like history and literature, students can discuss topics, get context, and analyze passages.

This multimodal approach makes for a more engaging and effective learning experience, as GPT-4o adapts to the student’s needs and provides tailored support across various subjects.
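As a rough illustration of the handwritten-equation scenario, the sketch below builds a request body that pairs a student's question with a photo of their work. It assumes the Chat Completions message format with an inline base64 image; the `build_tutor_request` helper, the system prompt, and the placeholder bytes are hypothetical, and nothing is sent over the network.

```python
import base64
import json


def build_tutor_request(question: str, image_bytes: bytes,
                        mime_type: str = "image/png") -> dict:
    """Build a request body combining a text question and one image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4o",
        "messages": [
            # Hypothetical system prompt for a tutoring persona.
            {"role": "system",
             "content": "You are a patient tutor. Explain each step."},
            {"role": "user",
             "content": [
                 {"type": "text", "text": question},
                 # The image is embedded as a data URL (an assumption for this sketch).
                 {"type": "image_url",
                  "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
             ]},
        ],
    }


# Placeholder bytes stand in for a real photo of handwritten work.
payload = build_tutor_request("Where did I go wrong in this equation?",
                              b"placeholder image bytes")
print(json.dumps(payload, indent=2)[:400])
```

Keeping the payload construction separate from the network call makes it easy to log or unit-test what the tutor is actually asked before any request is made.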

Interview Preparation

GPT-4o is invaluable for interview preparation, offering interactive and realistic practice sessions. Through real-time conversations, it can simulate interview scenarios, providing practice questions and immediate feedback on responses.

This helps candidates refine their answers and improve their communication skills. Additionally, GPT-4o can analyze body language and verbal cues through video input, offering constructive feedback to enhance non-verbal communication.

It can also assist in reviewing and refining resumes or portfolios so that they are tailored to the job description. This comprehensive, multimodal approach helps candidates go into their actual interviews well-prepared, confident, and articulate.

Fitness Coach

GPT-4o can provide interactive and personalized training experiences. Through real-time conversations, it can guide users through workout routines, offering immediate feedback on form and technique to help prevent injuries and keep exercises effective.

Video input allows GPT-4o to analyze movements and provide detailed corrections, enhancing the quality of workouts. Additionally, it can create customized fitness plans based on individual goals and progress, adapting routines as needed.

Shopping Assistant

GPT-4o can be your virtual shopping assistant. Through real-time voice conversations, it can help users find products, compare prices, and make informed purchasing decisions.

Video input allows users to show items they are considering, and GPT-4o can provide detailed information, reviews, and recommendations. This is particularly useful for fashion shopping, where it can suggest outfits, analyze the fit, and offer styling tips based on the user’s preferences and body type.

Additionally, it can track purchase history and preferences to provide personalized suggestions, ensuring a more efficient and tailored shopping experience.

Customer Service Operator

GPT-4o can enhance the role of a customer service operator by providing a more interactive and personalized support experience. Through real-time voice interactions, GPT-4o can handle customer inquiries, troubleshoot issues, and provide immediate responses, improving the efficiency of customer service operations.

Video input allows customers to show their problems directly, enabling GPT-4o to offer precise solutions, whether it’s guiding through product setup, diagnosing technical issues, or explaining complex processes visually.

Additionally, GPT-4o can analyze customer sentiment through voice and visual cues, tailoring responses to support a positive customer experience. This streamlines customer support, enhances satisfaction, and builds stronger customer relationships by delivering prompt and accurate assistance.

Financial Advisor

GPT-4o can provide personalized and interactive financial guidance. Through real-time voice conversations, it can discuss financial goals, answer queries, and offer tailored advice on budgeting, saving, investing, and retirement planning.

Video input allows clients to share documents, such as bank statements or investment portfolios, for immediate review and analysis. This enables GPT-4o to provide detailed, data-driven recommendations and explain complex financial concepts visually.

It can also monitor market trends and provide timely updates, ensuring clients make informed decisions. This helps clients achieve their financial objectives with confidence and precision.

Virtual Negotiator

GPT-4o can act as a virtual negotiator by providing dynamic, interactive, and data-driven negotiation support. Through real-time voice conversations, it can actively participate in negotiations, offering strategic advice, counterarguments, and persuasive techniques to achieve favorable outcomes.

Video input allows it to analyze visual cues and body language from other parties, helping to interpret emotions and intentions, and adjust strategies accordingly.

GPT-4o can also process and review relevant documents, contracts, and data shared via video input, providing instant analysis and highlighting key points or discrepancies. It can simulate negotiation scenarios, preparing users for various outcomes and responses. Whether in business deals, conflict resolution, or personal agreements, GPT-4o can help you negotiate your way through.

Human Behavior Research Tool

GPT-4o can help human behavior researchers by providing rich, real-time data collection and analysis. Through voice interactions, researchers can conduct interviews and surveys, capturing responses and vocal cues that provide deeper insights into participants’ emotions and attitudes.

Video input also lets researchers observe non-verbal communication, body language, and facial expressions, which are crucial for understanding behavioral patterns and psychological states.

GPT-4o can analyze these multimodal inputs to identify trends, correlations, and anomalies, aiding in the development of comprehensive behavioral models. It can also simulate various scenarios and record participant reactions, facilitating experimental studies in controlled environments.

Conflict Resolution Facilitator

GPT-4o can greatly enhance conflict resolution by enabling interactive, real-time mediation. Through voice interactions, it can guide discussions, clarify concerns, and offer neutral perspectives, while video input allows for the analysis of non-verbal cues like body language and facial expressions.

This helps in understanding the emotional context and underlying tensions. GPT-4o can provide immediate feedback, offer de-escalation techniques, and suggest fair compromises, so that all parties feel heard and understood.

It can also review and explain shared documents, promoting transparent and equitable resolutions, ultimately leading to more effective and lasting conflict resolution outcomes.

Conclusion

In conclusion, the release of GPT-4o marks a significant leap in AI technology. This powerful model offers not only improved text-based capabilities but also groundbreaking advancements in audio and visual processing. GPT-4o’s versatility opens doors to a wide range of applications across various industries, with the potential to revolutionize sectors like education, fitness, customer service, finance, and even research. As GPT-4o continues to develop, its impact on society is sure to be profound.

FAQs

➣ What is GPT-4o?

GPT-4o is OpenAI’s latest flagship model that can reason across audio, vision, and text in real-time. OpenAI claims it’s a step “towards much more natural human-computer interaction.”

➣ How is GPT-4o different than other versions of ChatGPT?

GPT-4o differs from earlier ChatGPT models by offering improved performance, including faster response times and better handling of complex queries, while retaining the comprehensive language capabilities of GPT-4.

➣ Is GPT-4o free?

Yes, GPT-4o is available to users of the free version of ChatGPT.

➣ Is GPT-4o better than GPT-4?

GPT-4o is considered an optimized enhancement of GPT-4, offering better performance in terms of speed and efficiency. However, the core language capabilities remain consistent with GPT-4.

➣ Is ChatGPT 4o available?

Yes, GPT-4o is now available to users.

➣ What does GPT-4o do?

GPT-4o can assess, summarize, and converse with users via text, visuals, and audio. It also answers your queries by combining both model knowledge and information from the internet, helping to provide even better insights.

➣ What’s new about ChatGPT 4o?

GPT-4o is a new, optimized version of OpenAI’s GPT-4, designed to enhance performance and efficiency while maintaining the robust language understanding and generation capabilities of its predecessor. It can now converse with you using images, audio, and video.
